Editor’s note: This update to a 2019 review of OCR tools is cross-published on Source. The original review was designed and written by DocumentCloud alumnus Ted Han and MuckRock COO Amanda Hickman. A lot has changed since then. Enjoy!

OCR, or optical character recognition, allows us to transform a scan or photograph of a letter, form or court filing into searchable, sortable text that we can analyze. In the four years since Source last reviewed the available options for OCR technology, the field has transformed.

MuckRock Foundation, home to DocumentCloud and MuckRock, uses a lot of OCR in our work. On DocumentCloud, which journalists use to analyze, annotate, and publish primary source documents, we run thousands of pages through Tesseract’s free and open source OCR software and make a handful of proprietary alternatives available as Add-Ons. Our public records request service, MuckRock, scans and publishes documents received through FOIA and local public records laws.

To celebrate the rollout of our latest Add-Ons, which allow users to run documents through Google Cloud Vision OCR and Azure Document Intelligence, we thought it was a good time to revisit Ted and Amanda’s 2019 review. What we found was promising: we see notable improvements in accuracy, speed, and versatility.

As we sought to reprise the initial review, we were struck by a few shifting dynamics in the field. Several open-source OCR projects that once thrived have sadly fallen out of maintenance or fallen behind in advancements, highlighting the challenges of sustaining community-driven initiatives over time. On the flip side, we’ve witnessed the rise of exciting new OCR tools built upon machine learning-based open-source frameworks, demonstrating the power of collaborative development in this era. Furthermore, the market has seen proprietary OCR solutions mature, showcasing the increasing integration of OCR into cloud-based services.

During our review, we carefully evaluated numerous OCR tools, encompassing both open source and proprietary options. Ultimately, we narrowed down our selection to five top contenders, comprising two free open-source tools, Tesseract and docTR, and three proprietary solutions: Amazon Textract, Azure Document Intelligence, and Google Cloud Vision.

We refined our selection by applying specific criteria, primarily ease of use, the quality of OCR results across our test documents, and each tool's consistency and maintenance track record. Each of these OCR systems is either integrated natively into the DocumentCloud interface (Tesseract and Amazon Textract) or available as a DocumentCloud Add-On (Google Cloud Vision, Azure Document Intelligence, and docTR).

No coding or technical knowledge is needed to use these tools and check our work. To use the cloud-based services, however, you will need to have access to DocumentCloud premium to run the Add-Ons, or fork the repository for the Add-Ons and substitute in your own credentials. DocumentCloud and all of its Add-Ons are open source and open to contribution.

Our tests

In the interest of testing each tool on some of the real-world examples that we encounter in our work, we came up with a list of sample documents, including:

-

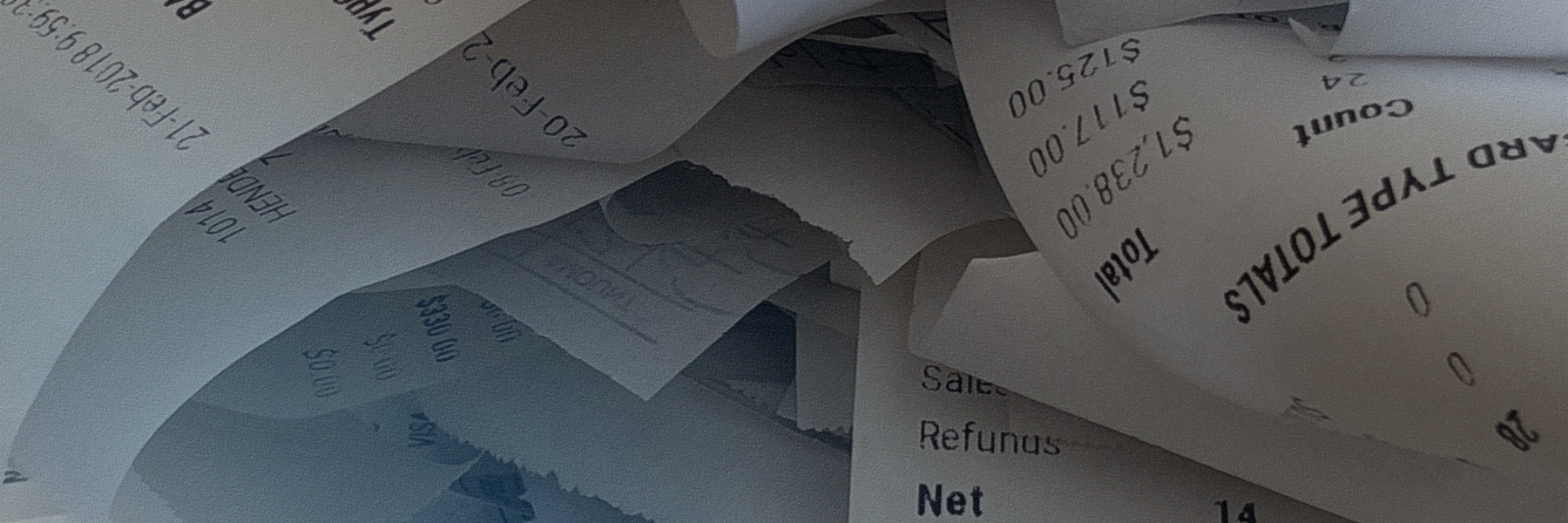

A receipt — This receipt from the Riker’s commissary was included in States of Incarceration, a collaborative storytelling project and traveling exhibition about incarceration in America.

-

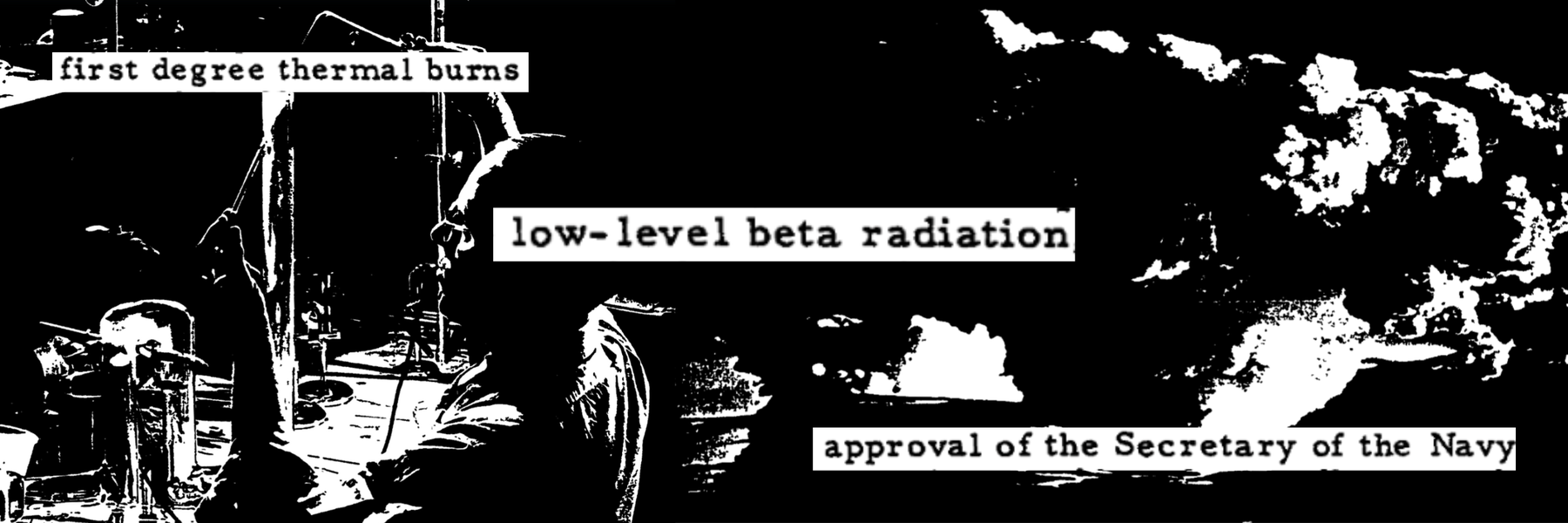

A heavily redacted document — Carter Page’s FISA warrant is a legal filing with a lot of redacted portions.

-

Something historical — Executive Order 9066 authorized the internment of Japanese Americans in 1942. The scanned image available in the national archives is fairly high quality but it is still an old, typewritten document.

-

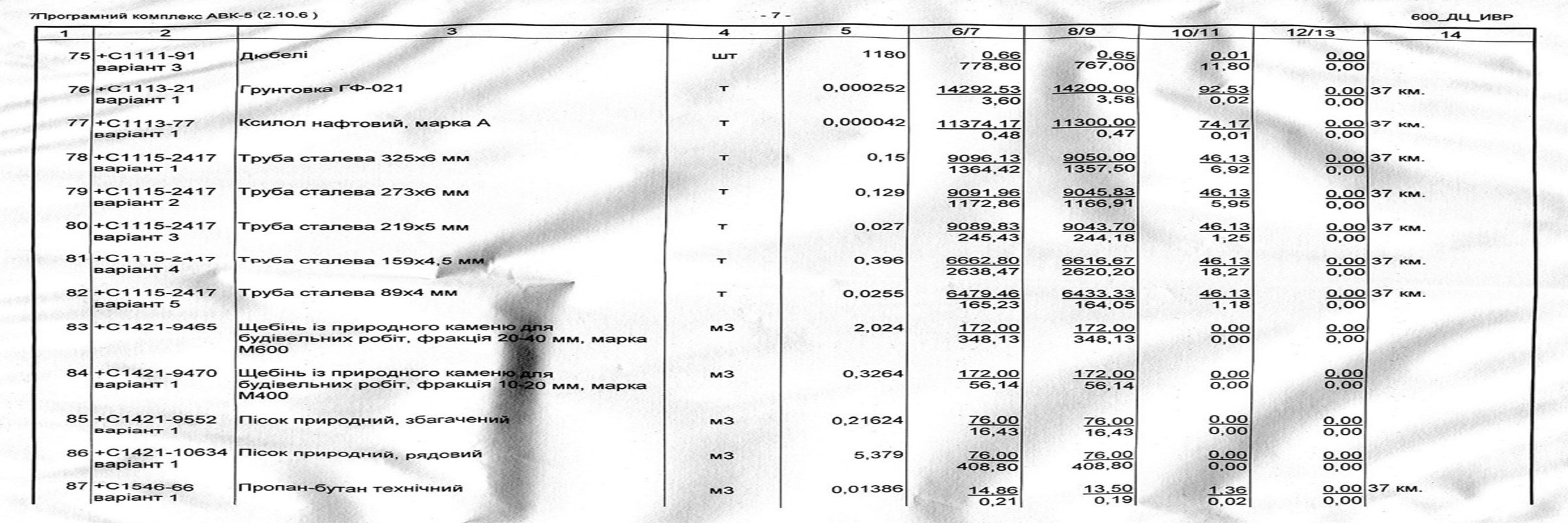

Something damaged— in early 2014 a group of divers retrieved hundreds of pages of documents from a lake at Ukrainian President Viktor Yanukovych’s vast country estate. The former president or his staff had dumped the records there in the hopes of destroying them, but many pages were still at least somewhat legible. Reporters laid them out to dry and began the process of transcribing the waterlogged papers. We selected a page that is more or less readable to the human eye but definitely warped with water damage.

-

A screenshot of a text message conversation — January 6 Commission documents, which are all available in a dedicated DocumentCloud project, include a handful of screenshots of text conversations. The screenshots have text in bubbles and include some overlays that make them surprisingly tricky to accurately OCR.

-

A form that contains plenty of handwritten text — Campaign finance disclosures are often filled out by hand and with varying degrees of legibility. A newsroom that wants to do any kind of analysis of data contained in a collection of forms has to start by extracting each form into a spreadsheet or other data store.

-

An acknowledgement letter that contains a signature and seal — Signatures are a special case for handwritten text and circular seals are a unique challenge on their own. We chose an acknowledgement letter for a public records request filed on MuckRock that contains both.

-

A clean, machine generated PDF written in simplified Chinese — Many OCR tools do not support non-Latin languages. Those that do produce inconsistent results with Asian languages. The preface to The Complete Works of Marx and Engels offered a clean and straightforward test for support of Chinese characters.

-

A multilingual document featuring handwritten text — Within the declassified CIA CREST Archive released by the MuckRock team, we came across a crude scan of a document in which the CIA was actively seeking issues of Chinese Communist newspapers dating from 1961 onwards. Within this document, one can find the name of each publication written in traditional Chinese characters, pinyin (the Latin alphabet transcription of Chinese), as well as English text. This document poses several challenges for OCR systems, including support for non-Latin languages, dealing with rough scans, and recognizing handwritten text. Adding to these challenges, we intentionally refrained from providing any language hint code to the OCR systems, in order to test their ability to distinguish between simplified and traditional Chinese.

What about layout?

All of the documents included in this review are organized into a DocumentCloud project. Each OCR system outputs a plaintext file representing the full document text, which you can review in our embedded OCR viewer below or on DocumentCloud by clicking on the project and switching the view in the bottom left to “Plain Text.” You can see the raw .txt file in our OCR viewer or by clicking on “Original PDF” on any document and changing the extension in the URL from .pdf to .txt. For example, the URL for the raw plaintext for Carter Page’s FISA warrant is https://s3.documentcloud.org/documents/23989721/carter-page-release-9-november-2018-doctr.txt
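
The extension swap described above is easy to script. Here is a minimal sketch using only the Python standard library. The function name `plain_text_url` is ours, purely for illustration, and the `.pdf` URL in the example is inferred by reversing the rule on the FISA warrant's `.txt` URL rather than copied from DocumentCloud.

```python
from urllib.parse import urlsplit, urlunsplit


def plain_text_url(pdf_url: str) -> str:
    """Return the raw plaintext URL for a document's "Original PDF" URL.

    Illustrative helper (not part of any DocumentCloud library): it only
    swaps a trailing ".pdf" in the URL path for ".txt".
    """
    parts = urlsplit(pdf_url)
    if not parts.path.lower().endswith(".pdf"):
        raise ValueError(f"expected a URL ending in .pdf, got: {pdf_url}")
    txt_path = parts.path[: -len(".pdf")] + ".txt"
    return urlunsplit(parts._replace(path=txt_path))


# Assumed PDF URL, derived from the .txt URL quoted in the text.
pdf = (
    "https://s3.documentcloud.org/documents/23989721/"
    "carter-page-release-9-november-2018-doctr.pdf"
)
print(plain_text_url(pdf))
```

Working on the parsed path rather than the whole string means a query string or fragment on the URL, if there is one, is left untouched.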

Each page of the document has its own page text that can be accessed. Positional coordinate information for each word on a page in JSON format is also available. Both the page text and the JSON position information for each page can be accessed programmatically using the DocumentCloud API. We have provided some example code using the Python wrapper for the DocumentCloud API on how to access these static assets.

The full document text, individual page text, and positional JSON are available for all documents, whether you use one of the Add-Ons or the native user interface to access Tesseract or Textract.

Review: Free and open-source options

Tesseract

Tesseract is a free and open-source command-line OCR engine that was developed at Hewlett-Packard in the mid-1980s and has been maintained by Google since 2006. It is written in C/C++ and is well documented; its manual is reasonably comprehensive.

Tesseract is available natively on the DocumentCloud front end and is the default OCR engine for documents that do not have an extractable, underlying text layer.

Tesseract’s strengths are its support for a wide variety of languages and its ease of setup and use. It struggles with anything other than clean, machine-generated documents, including scanned documents, handwritten text, and redactions.

docTR

docTR is a free and open-source end-to-end OCR library powered by TensorFlow 2 and PyTorch. It was open sourced by Mindee, a company that offers OCR for pre-configured form and document types as well as customizable options. docTR is mostly written in Python and is regularly maintained. The installation instructions are straightforward. Although docTR relies on machine learning models, a GPU is not required, and it can run on the CPU-only version of PyTorch available directly from the PyTorch site. In fact, the docTR DocumentCloud Add-On runs using only the CPU, as GitHub Actions does not yet support free GPU runners.

docTR is available with no setup as a free DocumentCloud Add-On, which you can use to OCR your documents if you have a verified DocumentCloud account.

docTR performs better than Tesseract on many of the document types Tesseract struggles with: scanned documents, screenshots, documents with strange fonts, etc. With some models, docTR’s recall and precision are much better than Tesseract’s and even those of some of the proprietary cloud-based services, as demonstrated in their benchmark table. docTR, however, does not support handwriting, which all of the cloud services do, and it also supports fewer languages than Tesseract. Of all the OCR models we reviewed, we found docTR to be the most beginner-friendly and intuitive in both its setup and use. We think that with the addition of support for handwritten text, docTR would give the cloud services a run for their money.

Review: Cloud services

Amazon Textract

Amazon Textract is a relatively new service that allows you to perform OCR, unearth tables from documents, and more. Textract did not appear in our first review of OCR tools, as it was in beta at the time and we were not granted access. Textract is built into DocumentCloud’s user interface natively. You can select it as an OCR engine upon upload or by clicking Edit -> Force Re-Process, selecting Textract, and checking the Force OCR option. Textract OCR on DocumentCloud does, however, require DocumentCloud premium, which grants you AI credits that you can use to OCR your documents, run AI-based Add-Ons, or translate your documents.

The cost for Amazon Textract is similar to that of the other cloud services: $1.50 per 1,000 pages of detected text, and more if you intend to use the service to extract tables or forms ($15.00 per 1,000 pages). The price for text detection drops to $0.60 per 1,000 pages after 1 million pages, the same as Microsoft’s and lower than Google’s at that volume. The first 1,000 pages are free.

Textract performed admirably on tasks that Tesseract struggles with, like extracting handwritten text and text from scanned and rough documents. Its main weakness is its lack of support for non-Latin languages, which in our opinion puts it behind both Azure Document Intelligence and Google Cloud Vision. Setting up Textract independently, though a bit cumbersome, was much easier than setting up Google Cloud Vision.

Google Cloud Vision

The Google Cloud Vision API allows you to transcribe text and detect other features (faces, labels, landmarks) in PDFs, TIFFs, and other image files. GCV is integrated into DocumentCloud as a premium DocumentCloud Add-On.

The cost for text detection in the Google Cloud Vision API is similar to that of both Amazon Textract and Microsoft’s Azure Document Intelligence: $1.50 per 1,000 pages, with the first 1,000 pages free. The price drops to $0.60 per 1,000 pages after 5 million pages, a much higher threshold for the cheaper rate than Amazon’s and Microsoft’s 1 million pages. New customers do get a $300 credit, which equates to about 200,000 pages of OCR. Depending on the size of the document set you’re looking to OCR, this could tip the balance in Google’s favor or leave it behind. The break-even point is right around 1.3 million pages, where Google’s $300 credit no longer compensates for paying the full rate past a million pages, when Textract and Azure have already dropped to the cheaper one.
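
The break-even arithmetic can be checked with a short calculation. The sketch below uses only the prices quoted in this review and makes several simplifying assumptions of ours: the whole document set is billed as a single batch, the 1,000 free pages are deducted once, and the $300 credit is subtracted straight from the Google bill. Real invoices will depend on each provider's billing periods and free-tier terms.

```python
FREE_PAGES = 1_000      # first 1,000 pages free (assumed one-time here)
BASE_RATE = 1.50        # dollars per 1,000 pages
BULK_RATE = 0.60        # dollars per 1,000 pages past the bulk threshold
GOOGLE_CREDIT = 300.00  # new-customer credit, in dollars


def tiered_cost(pages: int, bulk_threshold: int) -> float:
    """Dollar cost of text detection for `pages` pages under two-tier pricing."""
    base_pages = max(0, min(pages, bulk_threshold) - FREE_PAGES)
    bulk_pages = max(0, pages - bulk_threshold)
    return (base_pages * BASE_RATE + bulk_pages * BULK_RATE) / 1000


def textract_or_azure_cost(pages: int) -> float:
    """Cheaper rate kicks in after 1 million pages."""
    return tiered_cost(pages, 1_000_000)


def google_cost(pages: int) -> float:
    """Cheaper rate kicks in after 5 million pages; the credit offsets the bill."""
    return max(0.0, tiered_cost(pages, 5_000_000) - GOOGLE_CREDIT)


def break_even_pages(lo: int = 1_000_000, hi: int = 5_000_000) -> int:
    """Smallest page count at which Google costs more (binary search).

    Between 1 and 5 million pages the gap grows steadily, because Google
    still charges the base rate while the others charge the bulk rate.
    """
    while lo < hi:
        mid = (lo + hi) // 2
        if google_cost(mid) > textract_or_azure_cost(mid):
            hi = mid
        else:
            lo = mid + 1
    return lo


print(f"Google becomes more expensive at about {break_even_pages():,} pages")
```

Under these assumptions the result lands at roughly 1.33 million pages: past the first million, Google costs $0.90 more per 1,000 pages, so the $300 credit is used up after about 333,000 further pages.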

Google Cloud Vision performed admirably on all document sets. Google’s language support is also on par with Microsoft’s and Tesseract’s, and better than that of Amazon’s Textract and docTR, which do not support non-Latin languages. It even did well on our most complicated documents, with multilingual text and handwriting, all without language hint codes.

Most notably, it pulled the most text from the blurry seal at the top of the acknowledgment letter, nearly getting it all (“CITY OF ROUGE PARISH OF EAST BATON ROUG”), which was more than even Azure detected. It also made mistakes. On the campaign finance disclosure document, it detected a superscript number in an email address that was meant to be in normal script, and in earlier tests before redaction it interpreted some handwritten text as Cyrillic, an entirely different character set. In the multilingual document, both Google Cloud Vision and Microsoft’s Document Intelligence misidentified traditional handwritten Chinese as simplified Chinese characters in the absence of a language hint code, but the OCR’d characters provided similar translations.

Of all of the free and proprietary options in this review, however, Google performed the worst on ease of setup and use. The author of the Google Cloud Vision Add-On is familiar with the Google Developer console from writing scripts to interact with the Google Drive API and writing the Translate Documents Add-On, but that familiarity hardly makes the console less clunky. The program to send the API requests and get results was the longest of all of the cloud services, because it requires asynchronous requests and the use of Google Cloud Storage for processing. Storage buckets also cost money over time, so if you decide to go DIY, you will have to be mindful of clearing out the bucket or you will accrue those costs too.

Overall, in comparison to the setup of an S3 bucket or an Azure resource, setting up the Google buckets just to interact with the document text detection left something to be desired. Finally, to create the position information for each word detected by Google, we had to join the text of each of the symbols in a given word to recreate the word and then calculate the bounding box. We didn’t have to do this for any of the other models, which provided position information for each word in its entirety.
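
To illustrate the symbol-joining step, here is a minimal sketch that operates on plain dictionaries shaped like the Vision API's JSON output as we understand it (a word holds a list of `symbols`, each with `text` and `boundingBox.vertices`). It is not the Add-On's actual code; the helper names and the `(x_min, y_min, x_max, y_max)` output format are ours, and the sample coordinates are made up.

```python
def make_symbol(text, x1, y1, x2, y2):
    """Build a sample symbol dict with a four-corner bounding box (test data only)."""
    return {
        "text": text,
        "boundingBox": {
            "vertices": [
                {"x": x1, "y": y1},
                {"x": x2, "y": y1},
                {"x": x2, "y": y2},
                {"x": x1, "y": y2},
            ]
        },
    }


def word_from_symbols(word):
    """Rebuild a word's text and enclosing box from its symbols.

    The text is the symbols' text joined in order; the box is the smallest
    axis-aligned rectangle containing every symbol vertex.
    """
    symbols = word.get("symbols", [])
    text = "".join(symbol.get("text", "") for symbol in symbols)
    xs, ys = [], []
    for symbol in symbols:
        for vertex in symbol.get("boundingBox", {}).get("vertices", []):
            # A coordinate may be missing from JSON when it is zero.
            xs.append(vertex.get("x", 0))
            ys.append(vertex.get("y", 0))
    box = (min(xs), min(ys), max(xs), max(ys)) if xs else None
    return {"text": text, "box": box}


sample_word = {
    "symbols": [
        make_symbol("C", 10, 20, 18, 32),
        make_symbol("I", 19, 20, 22, 32),
        make_symbol("T", 23, 19, 31, 32),
        make_symbol("Y", 32, 20, 40, 33),
    ]
}
print(word_from_symbols(sample_word))
```

Taking the minimum and maximum over all vertices keeps the result a simple rectangle even when individual symbols are slightly rotated or offset, which is usually enough for highlighting a word on the page.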

Microsoft Azure AI Document Intelligence

Microsoft’s Azure AI Document Intelligence can be used similarly to Textract in that it has different models for text detection, table extraction, common form extraction (W-2s, passports, etc.), and custom forms. Azure Document Intelligence is integrated into DocumentCloud as a premium Add-On.

The cost for basic text detection with Azure is also $1.50 per 1,000 pages. The first 1,000 pages are free. After a million pages, the price drops to $0.60 per 1,000 pages, the same as Textract.

Azure performed admirably on all of the document sets. It even pulled non-visible copyright text it detected in hidden layers of the preface to Marx, which was a bit surprising. It performed well on Cyrillic, simplified Chinese, and even correctly identified some of the traditional Chinese characters in the multilingual handwritten document, although it made mistakes. In comparison to Amazon’s Textract, it supports more languages.

Where we think Azure shines above some of the other cloud services is the lack of friction to get started. It was the easiest to set up, and the easiest to kick the tires on while wrapping it into an Add-On. Microsoft includes plenty of documentation. There were fewer clicks and billing steps to get started on Azure than on the Google Developer console. The credential information was easily and readily accessible once a resource was created.

Where we think Azure struggles is its sensitivity and erratic formatting. We had to write some code to strip out extraneous lines of text that contained only dashes, periods, semicolons, and commas that clearly were not present in the document. This isn’t that big of a deal, but it was not necessary with any of the other models. As in our last OCR review, Azure handles column-based information on the receipt much less smoothly than other models like Textract, which seemed to handle this case the best of the three cloud services.
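
A cleanup pass of this kind can be quite small. The following is a sketch of one way to do it, not the code used in the Add-On: it drops any line made up solely of the four punctuation characters named above (plus whitespace) and leaves everything else, including blank lines, alone. The function names are illustrative.

```python
NOISE_CHARS = set("-.;,")


def is_punctuation_only(line: str) -> bool:
    """True if the line has at least one character and all of its
    non-whitespace characters are dashes, periods, semicolons, or commas."""
    stripped = "".join(line.split())  # remove all whitespace
    return bool(stripped) and all(ch in NOISE_CHARS for ch in stripped)


def strip_noise_lines(text: str) -> str:
    """Remove punctuation-only lines from OCR output, keeping all other lines."""
    kept = [line for line in text.split("\n") if not is_punctuation_only(line)]
    return "\n".join(kept)


sample = "Total: $4.50\n- - -\n.,;\n\nThank you."
print(strip_noise_lines(sample))
```

Blank lines are kept on purpose, since they often mark paragraph breaks in the page text. A line such as "Total: $4.50" survives because it contains characters outside the noise set.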

All the results in one place

You can flip through our viewer to see how each tool did with each of the documents.

Tools we skipped

Unfortunately, ABBYY’s pricing transparency is just not there anymore. In our last review we listed prices up front. Now the ABBYY team won’t share a quote without a request, which is usually a sign that the tools will be out of reach for most newsrooms. Their quick start guides do seem to be pretty straightforward once you are approved, and in our previous review we noted that their platform was easy to set up and use. We also could no longer find any mention of their desktop app, which used to be available without page restrictions for $200. Pricing appears to have shifted to cloud services only.

We initially planned to test Calamari again, but quickly confirmed that it didn’t perform as well as Tesseract or docTR. Combined with the fact that the current release is over a year old, we decided to skip Calamari.

OCRopus appears to have been archived.

As with Calamari, we quickly confirmed that Kraken wasn’t any better than the free or cloud-based services available. And it does not support Windows, which limits its usefulness.